When ERA Sciences advise on test exception management for protocol execution issues, encountered during validation of on-premise, hosted or even SAAS systems the same advice applies, you must have

1. Clarity

2. Follow your QMS and procedural requirements (or your vendors QMS if the validation is completely outsourced)

I could probably have listed these in reverse order but we generally find clarity the biggest issue.

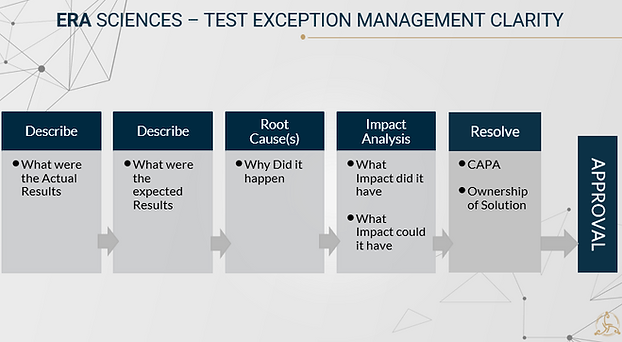

1. Clarity - this part should be easy

-

what actually happened

-

what should have happened

-

why did it happen - root cause(s)

-

what impact did it have/ could it have

-

how to solve the problem (Immediate and/or long term fix)

-

who will solve the problem

-

if the problem can be solved do we need to run the test again (most likely yes)

-

the problem cannot be solved but the business have agreed that this will not have an impact on the system, process etc and no need to do a second or third test run

-

the problem cannot be solved and will have an impact - critical business decision of go no go with the process, system etc until a solution can be found

-

-

resolution outcome confirmed, documented

-

approvals

Now this probably sounds quite straightforward but often when a test exception is required it is seen somewhat of an Art and experience and quality oversight are extremely important -what we don't want to happen is a shell game where, whether intentionally or unintentionally the test exception record becomes 'The Art of Deception'

Many organisations struggle to record clearly 'the what actually happened' distinct from the 'why it happened'.

- the tester should record the what happened first 'Actual Result', and then the what should have happened 'Expected Result', the why is often an unknown at this stage so until a level of root cause analysis is performed keep it separate and distinct. There is always a danger of assuming the root cause and also the impact and where systems have for example a level of configuration with workflows built on shared or common forms the impact could be wider than assumed - the technical team needs to be involved and quality oversight must be applied.

When I read what happened I don't want to read what should have happened in the same sentence, keep it factual and clear. This should be easy each test case being executed should have clear instructions and step(s). Each step or individual test must have clearly defined acceptance criteria (Expected Result) so if you don't get that result the test step fails.

In the case of manual testing a tester should never make an impact decision and decide that the fail is insignificant and continue on without discussing with the validation team. The test discrepancy will need to be documented irrespective of impact.

In the case of automated testing depending on how the test scripts are written and the acceptance criteria applied the testing may automatically stop and a failure error is generated or continue on to completion with all failures recorded. Either halted due to the failure error or completing the full run of tests will still require all failures to be investigated and exceptions documented. Deviation summaries are generated automatically and this can often be a selling point of SAAS and hosted systems.

Last year it was great to get additional insight into how testers manage test failures during a very interesting conference on Selenium a free (open-source) automated testing framework used to validate web applications across different browsers and platforms. Even reading some of the Q/A session replies gave me a better understanding of how their test exception process works.

2. What does your QMS require? Is this a Deviation or a Test Exception or Test Event or Test Failure

Some of you reading this post may use the term test discrepancy or test deviation and be somewhat confused by my use of test exception, the terminology is typically defined at a procedural level in your QMS

The advice on this one is even simpler: it should be clear from your SOPs whether this is a deviation or test exception or event. Different quality organisations have their own documented ways of managing and documenting 'when things go wrong' so I can't tell you, 'you' should tell you. And this comes back to clarity point 1 above, an SOP or work instruction or approved non procedural document should describe clearly what needs to be documented and how it needs to be documented in the exception /deviation record that you will need to generate. And most importantly the test plan or validation plan or even the protocol itself should clearly define the 'what to do' if a test failure occurs.

In general validation test exceptions relate directly to a test which has been executed with clearly defined test objectives and expected results and these have not been achieved - test result not as Expected. In this case the test has Failed and an Exception needs to be documented. Failures can have a huge impact, limited impact or even no impact on the process, workflow, system being tested so it is very important to capture impact in the test exception report. Drawing the hardest line possible here if a failure has a potential or actual GxP Impact then it should never be considered/categorized as no impact.

Validation of any computerised system should be based on intended use, knowledge of intended performance, and capability to confirm the suitability for its intended use. When it comes to impact of an exception or test failure the team decision is very important and the business process owner and system owner need to fully understand the impact.

Exception Criticality and Risk

Validation deviations or exceptions are not to be feared, with so many test cases, test steps, system configuration dependencies they are really part of 'business as usual'. In fact if I review test protocols and see no errors or no deviations I am likely to expect some kind of 'other' issue. As you read this final section you may be thinking about deviation classification and have I purposely neglected to mention it - the answer is No. I have left this until last because within your QMS you most likely have categorised deviations as critical, non critical and simple and even included examples of each for the employees.

Each company should 'set up their stall' so to speak and it should be clear to the validation team prior to testing commencement what constitutes a failure in each of these criticality categories. This makes the impact decision much easier and will ultimately determine a go/no go decision for a system.

Test yourself Example: Failure of a test step due to entry error by a tester causes a batch yield calculation to pass , however the test step expected result was a fail.

This might be resolved by re-running the test with the correctly entered values, and screen shot evidence generated to confirm the test generated the expected result when performed correctly.

But is this a simple deviation? Work through 1. Clarity and 2. Know your own QMS and procedures and see what you would decide.

If you are interested in getting more information on this or any other DI topic please contact us at CONTACT US | ERA Sciences

Comments