'Not all data is created equal' has become a much-used phrase, but for those in the Life Sciences and MedTech sector, and for anyone committed to manufacturing safe, reliable, effective, high-quality products, it carries significant weight and requires a clearly defined risk management approach to the data lifecycle. If you own the data you must understand its significance, criticality and risk, and you must protect it from disaster.

As we continue to transition away from paper-based GxP records and systems to electronic systems that can create, manage, transform and process electronic data, we open up what some consider 'a Pandora's Box' of backup and restore activities and challenges.

IT Departments have been performing backup and restore on non-GxP data for years. So where do the challenges, unexpected risks and blind spots come from with our GxP data?

At ERA Sciences we believe the following points are the biggest challenges to effective backup and restore activities (the first three bullet points are the main focus of this article):

- Lack of Common Language across stakeholders

- Determining what data needs to be backed up (data and metadata)

- Determining the correct frequency of backup (timely, correct and reliably scheduled)

- Choosing the optimum Backup and Restore technology solution

- Ensuring Visibility of Backup and Restore Failures

- Confirming Ownership and Escalation (less of a challenge, it's normally IT or a system administrator)

- Adequate Procedural controls

- Performing Periodic backup/restore challenges

Stakeholders and Common Language

Certainly a big part of the challenge is the lack of a common language and understanding among stakeholders. The business knows it needs its data backed up to 'protect it' in the event of a disaster, and to get systems running again and data restored if a disaster does occur.

In general it's the IT Department or a local system administrator who manages or oversees the backup and restore processes, although sometimes, because of system complexity or resource limitations, backup/restore is managed directly by the vendor.

So let's get everyone on the same 'common language' page.

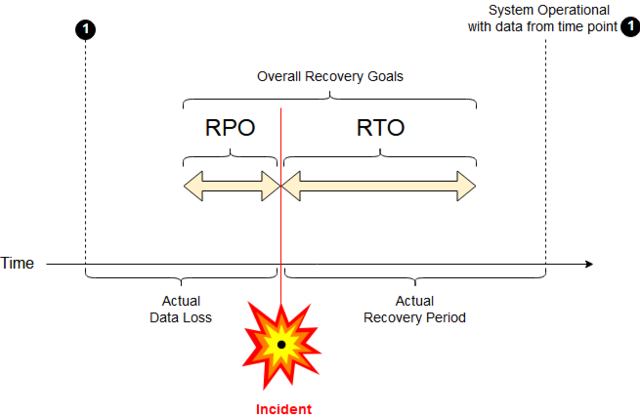

Recovery Point Objective (RPO) - is the amount of data you could afford to lose if a server, database or application has a failure. For example, if you back up your server once a night, your RPO should be 24 hours, whereas if you replicate your server in real time your RPO should be seconds.

This RPO can be a hard pill to swallow (excuse the pun). With nightly backups you could potentially lose up to 24 hours of data, but if backups are only scheduled weekly that becomes potentially 168 hours of data, and the potential loss gets worse the bigger the gap in time between each backup.
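The arithmetic behind those figures can be sketched in a few lines of Python. This is only an illustration and assumes the worst case, where a failure happens just before the next scheduled backup; the function names and the example schedules are hypothetical, not taken from any backup tool.

```python
from datetime import timedelta


def worst_case_data_loss(backup_interval: timedelta) -> timedelta:
    """Assumption: the failure occurs just before the next backup would have run,
    so everything created since the previous backup is lost."""
    return backup_interval


def meets_rpo(backup_interval: timedelta, rpo: timedelta) -> bool:
    """True if the worst-case loss for this schedule stays within the agreed RPO."""
    return worst_case_data_loss(backup_interval) <= rpo


# Hypothetical schedules, for illustration only.
schedules = {"nightly": timedelta(days=1), "weekly": timedelta(weeks=1)}
for name, interval in schedules.items():
    hours = worst_case_data_loss(interval).total_seconds() / 3600
    print(f"{name}: up to {hours:.0f} hours of data at risk")
# prints "nightly: up to 24 hours of data at risk"
# prints "weekly: up to 168 hours of data at risk"
```

A check like `meets_rpo(timedelta(weeks=1), timedelta(hours=24))` returns `False`, which is the conversation the business and IT need to have before the schedule is agreed.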

So this key RPO decision resides with the business stakeholders, data SMEs and often QA, who need to confirm the criticality of the data in question and the frequency of data change. Both of these factors should directly influence the scheduling of backups. For systems or applications that are used infrequently the backup frequency can be much lower, so there is no point in agreeing with IT that every system needs an RPO of, say, 24 hours if this is not the case.

In 2020 Acronis, a leading backup solution provider, reported that although nearly 90% of companies surveyed are backing up data, only 41% do it daily, leaving gaps in the data available for recovery (While nearly 90% of companies are backing up data, only 41% do it daily - Help Net Security). Is this risk to data a result of poor decision making, or of a lack of common language and understanding?

PIC/S and many other guidance documents and regulations advise that the location of backups is also critical: 'Routine backup copies should be stored in a remote location (physically separated) in the event of disasters'. There are lots of backup dos and don'ts, and IT will be aware of them, but make sure backup locations are checked on a periodic basis.

Recovery Time Objective (RTO) - is the maximum tolerable length of time that a computer, system, network, or application can be down after a failure or disaster occurs; in other words, the time allowed between an unexpected failure or disaster and the resumption of normal operations and service levels.

The business stakeholders and data owners must decide on the optimum RTO for their system and data and then IT advise on whether it's achievable.

Another consideration that can lead to an elevated data 'availability' risk: if IT advise that it is easier and more efficient to just back up every system every night, you need to ensure that getting any one system and its data back is not a monumental task when everything is backed up in 'one nightly swoop'. This often comes down to which (network) backup solutions/tools are used, what degree of granularity is available when looking at the backed-up data, and where the final backup location is.

This is also the point where you should question the difference between nightly backups vs realistic and achievable RTOs. Just because the backups happen nightly it doesn't guarantee an RTO of 24 hours.
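To make that point concrete, here is a rough, back-of-the-envelope sketch in Python. It assumes restore time is simply data volume divided by restore throughput, plus a fixed overhead for things like retrieving media and verifying the restored system. Every number and name below is a hypothetical assumption; real restore times should come from an actual restore test.

```python
def estimated_restore_hours(data_gb: float,
                            throughput_gb_per_hour: float,
                            overhead_hours: float = 0.0) -> float:
    """Simplified estimate: transfer time plus fixed overhead (an assumption,
    not a measured value)."""
    if throughput_gb_per_hour <= 0:
        raise ValueError("throughput must be greater than zero")
    return data_gb / throughput_gb_per_hour + overhead_hours


def meets_rto(restore_hours: float, rto_hours: float) -> bool:
    """True if the estimated restore time fits inside the agreed RTO."""
    return restore_hours <= rto_hours


# Hypothetical system: 2000 GB restored at 100 GB/hour with 6 hours of overhead.
hours = estimated_restore_hours(2000, 100, overhead_hours=6)
print(f"Estimated restore time: {hours:.1f} hours")  # prints "Estimated restore time: 26.0 hours"
print("Meets 24 hour RTO:", meets_rto(hours, 24))    # prints "Meets 24 hour RTO: False"
```

In this made-up case the backups run every night, yet the estimated restore still misses a 24 hour RTO.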

IBM have been backing up data for as long as we have been using PCs and you will find additional information on what disasters look like and RTO management at the following link.

RTO (Recovery Time Objective) explained | IBM

So hopefully the terms RTO and RPO are now straightforward, but there are lots of moving parts to get right: infrastructure, firewalls, location of the final backup, whether mobile hard drives need to be used, and how fast the data and application need to be available (think internal and external audits, think product release and COAs not being available, think regulatory impact). Think ALCOA+ data (Complete, Consistent, Available and Enduring).

Manual vs automated backups can also be a challenge, but the terms themselves need no explanation. Automated backups, once validated, are definitely a less risky way to manage your data and remove the potential for user error to a large degree. Also consider the types of backup you employ: incremental or differential backups, for example, would not be advisable for manual backup processes (too many choices to be made each time).

Validation and Testing

Regulatory requirements around backups are at a glance worded differently but ultimately require that 'the integrity and accuracy of backup data and the ability to restore the data is checked during validation and monitored periodically'.

- EudraLex - Volume 4 - Good Manufacturing Practice (GMP) guidelines | Public Health (europa.eu), Annex 11 Final 0910 (europa.eu), Annex 11.7.2

- China GMP 163

- Brazil GMP 585

- Data Integrity and Compliance With CGMP Guidance for Industry (fda.gov)

Note: Backups in the context of this blog article refer to backup copies that are created of the live data during normal computer use and temporarily maintained for disaster recovery. FDA's 211.68(b) interprets backups as long-term maintained copies (archived copies), and temporary backups for disaster recovery purposes would not satisfy the requirements of 211.68(b).

So no doubt about it: backup and restore is a regulatory requirement for computerised systems used for the maintenance of GxP data. Prior to system release you must validate that the backup and restore process, using the chosen backup solution(s), works reliably end to end, and that the data/system can be restored to its pre-disaster state if a disaster were to occur.

Any change to a backup/restore process post system go live will need to be performed under formal change control.

Validating the backup process is definitely a team effort. It may require external vendor input for system understanding, data and metadata locations, system configuration and operating system considerations, as well as administration-level validation testers and, most definitely, in-house IT management.

You also need to think about how you will periodically challenge backup and restore AFTER GO LIVE, which is also a regulatory requirement, and where the data will be restored to. This is unlikely to be a live system, as you may risk corrupting your data (not saying it can't be done, but for a periodic challenge you may want to create a secondary replicate database or utilise a digital twin).
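One way to evidence that a restore to a secondary location really matches the source is to compare file checksums. The Python sketch below is a minimal illustration using only the standard library. It assumes the data in question is a set of flat files under a folder; a database would need a different, vendor-advised check. The function names are our own, not from any backup product.

```python
import hashlib
from pathlib import Path


def sha256_of(path: Path) -> str:
    """Hash a file in chunks so large files do not need to fit in memory."""
    digest = hashlib.sha256()
    with path.open("rb") as handle:
        for chunk in iter(lambda: handle.read(1024 * 1024), b""):
            digest.update(chunk)
    return digest.hexdigest()


def snapshot(root: Path) -> dict[str, str]:
    """Map each file's path (relative to root) to its SHA-256 checksum."""
    return {
        path.relative_to(root).as_posix(): sha256_of(path)
        for path in sorted(root.rglob("*"))
        if path.is_file()
    }


def compare(source: dict[str, str], restored: dict[str, str]) -> dict[str, list[str]]:
    """List files that are missing, unexpected or changed in the restored copy."""
    return {
        "missing": sorted(set(source) - set(restored)),
        "unexpected": sorted(set(restored) - set(source)),
        "changed": sorted(
            name for name in source.keys() & restored.keys()
            if source[name] != restored[name]
        ),
    }
```

In a periodic challenge you might take `snapshot()` of the source folder at backup time, restore to the secondary location, take `snapshot()` there and record the output of `compare()` as test evidence. Three empty lists would indicate a matching restore.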

Another discussion that needs to take place, and again where common language applies, is whether backups:

- will be incremental, differential or full (see the link below)

- will be hot or cold or both (Hot - a technique used in data storage and backup that enables a system to perform a routine backup of data even while the data is being accessed by a user. Cold - no system access allowed)

Types of backup and five backup mistakes to avoid | WeLiveSecurity
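The practical difference between full, differential and incremental backups shows up at restore time. The Python sketch below works out which backup copies would be needed to restore to a given day. It assumes the commonly used definitions (a differential holds all changes since the last full backup, an incremental holds changes since the most recent backup of any type); your backup tool may define these differently, so treat the `Backup` class and `restore_chain` function as hypothetical illustrations.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Backup:
    day: int   # hypothetical day index within the schedule
    kind: str  # "full", "differential" or "incremental"


def restore_chain(backups: list[Backup], target_day: int) -> list[Backup]:
    """Return the backups to restore, in order, to reach the state at target_day."""
    candidates = sorted((b for b in backups if b.day <= target_day), key=lambda b: b.day)
    last_full = max((i for i, b in enumerate(candidates) if b.kind == "full"), default=None)
    if last_full is None:
        raise ValueError("no full backup available on or before the target day")
    chain = [candidates[last_full]]
    later = candidates[last_full + 1:]
    # The latest differential replaces everything between it and the full backup.
    last_diff = max((i for i, b in enumerate(later) if b.kind == "differential"), default=None)
    if last_diff is not None:
        chain.append(later[last_diff])
        later = later[last_diff + 1:]
    # Every incremental after that point is needed, in order.
    chain.extend(b for b in later if b.kind == "incremental")
    return chain


# Hypothetical week: full backup on day 0, then a daily backup on days 1 to 5.
incremental_week = [Backup(0, "full")] + [Backup(d, "incremental") for d in range(1, 6)]
differential_week = [Backup(0, "full")] + [Backup(d, "differential") for d in range(1, 6)]
print([b.day for b in restore_chain(incremental_week, 3)])   # prints [0, 1, 2, 3]
print([b.day for b in restore_chain(differential_week, 3)])  # prints [0, 3]
```

The longer the chain, the more copies have to be intact and the more steps the restore involves, which feeds directly into the RTO discussion.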

IT (and system vendor) will advise based on

1. data type (flat files or in a database) and data and system criticality

2. frequency of system use and impact of downtime to the business

3. internal IT policies

4. backup tools utilised

You may need to create test data over a period of days to challenge correctly (obviously your system isn't actually live yet!)

Whatever the decision, every type of backup to be implemented should be tested during validation. If a weekly cold backup and nightly hot backups are performed, both need to be tested during validation.

We would be really interested to hear whether 'meeting the target RTO and RPO' are test cases during your validation execution. Let us know in the comments or contact us: CONTACT US | ERA Sciences

Determining what data needs to be backed up

According to IBM 'IT prioritizes applications and data based on their revenue and risk' and the business must clearly communicate this risk.

When it comes to determining what data and records need to be maintained and backed up, this is definitely a business decision and requires an understanding of the regulations that apply to your industry and specific sector. For example, looking at FDA's predicate rule requirements will quickly give you a broad understanding of the 'what and for how long' with respect to overall record retention. See the example below.

211.180 General requirements around the what and for how long

(a) Any production, control, or distribution record that is required to be maintained in compliance with this part and is specifically associated with a batch of a drug product shall be retained for at least 1 year after the expiration date of the batch or, in the case of certain OTC drug products lacking expiration dating because they meet the criteria for exemption under § 211.137, 3 years after distribution of the batch.

Now this requirement applies to overall retention, but if you keep your records live on a system they must be available and, in the case of a disaster, recoverable. Backup/restore would therefore apply to all the live 'maintained' records, and archival (a separate conversation) to all those records that are no longer live but must still be retained for the period in the example above.

This overall record retention period should not be confused with how long backups are maintained. Most IT departments retain backups for a pre-defined time (must be clearly specified in internal policies and procedures) and then replace them with later backup copies beyond the specified time. 30-90 days is typical of what we have come across at ERA Sciences but it varies across the industry.
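The two time periods are easy to mix up, so here is a small Python sketch that keeps them apart. It only covers the '1 year after the expiration date' branch of 211.180(a) quoted above (not the OTC exemption), and the backup retention window is whatever your internal procedures specify; the 30 days used in the example is just one end of the range mentioned above. The function names are hypothetical.

```python
from datetime import date, timedelta


def record_retention_end(batch_expiry: date) -> date:
    """Earliest date on which the '1 year after expiration' period has passed."""
    try:
        return batch_expiry.replace(year=batch_expiry.year + 1)
    except ValueError:
        # Assumption: a 29 February expiry rolls forward to 1 March the next year,
        # so the record is kept for at least a full year.
        return date(batch_expiry.year + 1, 3, 1)


def backup_copy_expired(backup_taken: date, today: date, retention_days: int) -> bool:
    """True once a disaster recovery backup copy is older than the retention window."""
    return today > backup_taken + timedelta(days=retention_days)


print(record_retention_end(date(2025, 6, 30)))                             # prints 2026-06-30
print(backup_copy_expired(date(2025, 1, 1), date(2025, 3, 1), 30))         # prints True
```

The record itself must still be retained long after the backup copy that once contained it has been overwritten, which is why backup is not a substitute for archival.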

But it's not just original data and records that need to be maintained, it's also the relevant metadata. This has definitely become a more complex ask and will require vendor input and an understanding of data storage locations, system configuration setting location(s), log file locations and metadata location(s), such as audit trails.

Raw data, original records and metadata may all reside in different physical or virtual locations depending on the application and computerised system (be it on-premise, hosted or SaaS), and diligence must be applied to ensure that these essentially 'fragmented' data sets are all identified and backed up. The system vendor must be able to comprehensively advise on the exact location of all record and data types. Some systems may have internal data storage cards that store a subset of data outside the main database, flat file structure or folders, so you need to get technical (and definitely get IT involved).
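A simple way to catch 'fragmented' data sets is to keep an inventory of where each type of data lives and check it against what the backup job actually covers. The Python sketch below illustrates the idea. The category names follow the list above, but the inventory structure, the paths and the `coverage_gaps` function are all hypothetical assumptions; the real locations must come from your system vendor and IT.

```python
# Categories of data that should each have at least one known, backed-up location.
REQUIRED_CATEGORIES = {"raw data", "metadata", "audit trail", "configuration", "log files"}


def coverage_gaps(inventory: dict[str, list[str]], backup_scope: set[str]) -> dict[str, list[str]]:
    """Return, per category, the locations that are not included in the backup scope.
    A category with no known location at all is flagged as well."""
    gaps: dict[str, list[str]] = {}
    for category in sorted(REQUIRED_CATEGORIES):
        locations = inventory.get(category, [])
        if not locations:
            gaps[category] = ["<no location identified>"]
            continue
        missing = [loc for loc in locations if loc not in backup_scope]
        if missing:
            gaps[category] = missing
    return gaps


# Hypothetical inventory and backup scope, for illustration only.
inventory = {
    "raw data": ["D:/instrument/results", "internal storage card"],
    "metadata": ["D:/instrument/db"],
    "audit trail": ["D:/instrument/db"],
    "configuration": ["C:/ProgramData/instrument/config"],
}
backup_scope = {"D:/instrument/results", "D:/instrument/db"}
print(coverage_gaps(inventory, backup_scope))
```

In this made-up example the check would flag the internal storage card, the configuration folder and the missing log file location, exactly the kind of gaps that tend to surface only when a restore is attempted.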

And once we know what has to be maintained, we can determine what needs a routine backup and the correct scheduling of the backups needed to protect these records and data in the case of a disaster.

The following is not a challenge to backup/restore; it's an assumption of understanding across all stakeholders. Whether the data, metadata and records are the live data on the system or the backup copy, security and protection controls still apply: access to data should be limited and appropriate based on need, and segregation of duties should be respected and enforced. Backed-up data is just as important as live data (in the case of disaster it will become your live data), so ALCOA+ applies.

Why data backup and protection is not just insurance | Cibecs : Cibecs

We hope you enjoyed the start of this Blog Series on Data Backup and Restore. Part 2 will be posted in the coming weeks and will discuss

- Choosing the optimum Backup and Restore technology solution

- Ensuring Visibility of Backup and Restore Failures

- Confirming Ownership and Escalation (less of a challenge, it's normally IT or a system administrator)

- Adequate Procedural controls

- Performing Periodic backup/restore challenges.

Feel free to comment and contact us if you have any queries on backup/restore or data integrity, or would like stakeholder training on this or any of our other data reliability topics. CONTACT US | ERA Sciences
